4 - Writing Machine Learning Scripts

In this section we will explore how to write a Machine Learning script that helps you make the best use of the cluster and follows good practices. You can find the full example at example.py.

4.1 - Parsing Arguments and Hyperparameters

When running Machine Learning experiments, it's important to be able to easily change the hyperparameters of the model and the training process. There are several ways to achieve this, but we recommend using the argparse library for general flags and parameters, and YAML for model and training loop hyperparameters. The code below shows an example, whose full version you can find at example.py.

    import argparse

    import yaml

    # General flags and parameters come from the command line.
    parser = argparse.ArgumentParser()
    parser.add_argument("--config", type=str, default="config.yaml")
    parser.add_argument("--debug", action="store_true")
    parser.add_argument("--seed", type=int, default=42)
    parser.add_argument("--num_workers", type=int, default=4)
    parser.add_argument("--device", type=str, default="cuda:0")
    args = parser.parse_args()

    # Model and training loop hyperparameters come from the YAML file.
    with open(args.config, "r") as f:
        config = yaml.safe_load(f)

4.2 - Logging

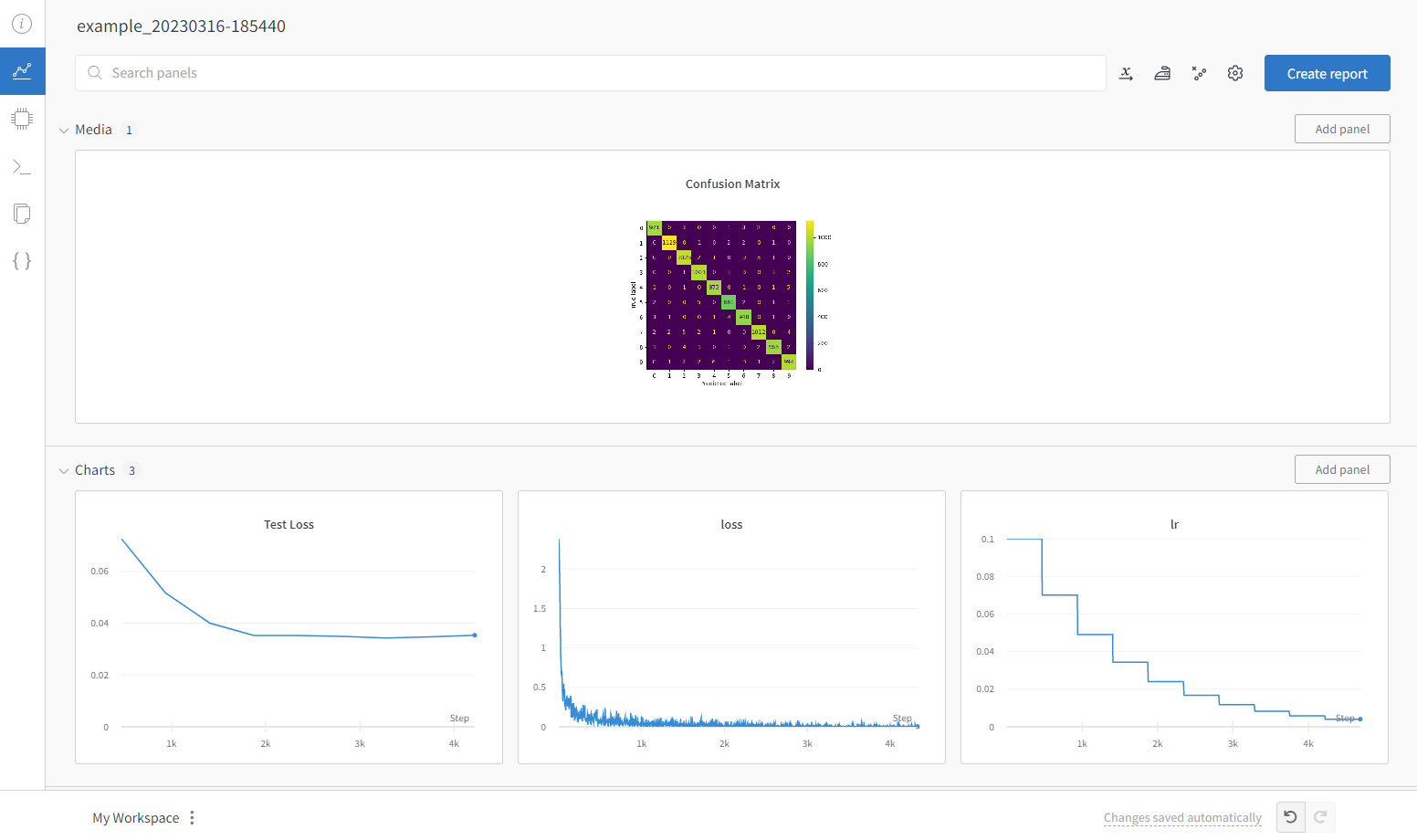

We recommend using logging tools like Weights & Biases (wandb) (opens in a new tab), Comet (opens in a new tab), and Tensorboard (opens in a new tab) to keep track of your experiments and results. They allow you to log metrics, hyperparameters, losses, tables, figures and much more of your machine learning experiments and also provide a web-based interface that helps you visualizing and analyzing different runs.

The figure below shows an example of the web interface of Weights & Biases (opens in a new tab) for the example.py script.
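As a rough illustration of how metrics can be sent to one of these tools, the sketch below keeps the training loop independent of the logging backend by passing in a logging function. The names `train` and `run_with_wandb`, the project name `my-project`, the `num_epochs` config key and the metric name `train/loss` are assumptions made for this example, and the loss is a placeholder value rather than a real training step. `wandb.init`, `wandb.log` and `finish` belong to the Weights & Biases Python client, which is only imported when `run_with_wandb` is called.

```python
from typing import Any, Callable, Dict


def train(num_epochs: int, log_fn: Callable[[Dict[str, float]], Any]) -> None:
    """Runs a dummy training loop and reports one metrics dict per epoch."""
    for epoch in range(num_epochs):
        # Placeholder loss; a real script would compute it from the model.
        loss = 1.0 / (epoch + 1)
        log_fn({"epoch": epoch, "train/loss": loss})


def run_with_wandb(config: Dict[str, Any]) -> None:
    """Logs the same loop to Weights & Biases (requires wandb and a login)."""
    import wandb  # imported lazily so the rest of the script runs without it

    # "my-project" is a hypothetical project name; config stores hyperparameters.
    run = wandb.init(project="my-project", config=config)
    train(config["num_epochs"], wandb.log)
    run.finish()


if __name__ == "__main__":
    # Local dry run: print the metrics instead of sending them anywhere.
    train(num_epochs=3, log_fn=print)
```

During development you can pass `print` (or the `append` method of a list) as `log_fn` to inspect the metrics without creating a run.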

4.3 - Saving and Loading Checkpoints

It's a good practice to save checkpoints of your model during training. This way you can resume training from a checkpoint in case your training process is interrupted for some reason. You can also save multiple checkpoints during training, and select the best model according to some metric. You can save a checkpoint with the following code:

    import torch

    model = torch.nn.Linear(3, 1)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)

    epoch = 0  # placeholder: inside a training loop, use the current epoch index

    # Store everything needed to resume training, not just the model weights.
    checkpoint = {
        "epoch": epoch,
        "model": model.state_dict(),
        "optimizer": optimizer.state_dict(),
        "scheduler": scheduler.state_dict(),
    }
    torch.save(checkpoint, "checkpoint.pth")

To load a checkpoint you can use the following code:

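    # model, optimizer and scheduler must already exist, created as in the snippet above.
    checkpoint = torch.load("checkpoint.pth")
    model.load_state_dict(checkpoint["model"])
    optimizer.load_state_dict(checkpoint["optimizer"])
    scheduler.load_state_dict(checkpoint["scheduler"])
    # Assumes the checkpoint was saved at the end of an epoch.
    start_epoch = checkpoint["epoch"] + 1

4.4 - Seeds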

It's a good practice to set the seeds of the random number generators of the libraries you are using. This way you can reproduce the results of your experiments. You can set the seeds with the following code:

    import random

    import numpy as np
    import torch


    def set_seed(seed):
        random.seed(seed)                 # Python's built-in RNG
        np.random.seed(seed)              # NumPy
        torch.manual_seed(seed)           # PyTorch
        torch.cuda.manual_seed_all(seed)  # PyTorch, all CUDA devices

However, it's important to note that some GPU operations are not deterministic by default, so results can still differ between runs even with fixed seeds. To enforce deterministic behaviour in cuDNN, set its deterministic flag to True with the following code:

    torch.backends.cudnn.deterministic = True

4.5 - Testing Code with Jupyter Notebooks

When building a Machine Learning model, it's important to test your code before running it on the cluster. Jupyter Notebooks are a great tool to do this. They allow you to test a piece of code and visualize the results. You can find an example of a Jupyter Notebook at example.ipynb that tests the MNISTDataset.
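The kind of quick check you might run in a notebook cell can be sketched as follows. `ToyDataset` is a hypothetical stand-in written for this example, not the MNISTDataset from example.py; it only assumes that a dataset exposes `__len__` and `__getitem__` and returns an (image, label) pair with a 28x28 image.

```python
import random
from typing import List, Tuple


class ToyDataset:
    """Hypothetical stand-in for a dataset: random 28x28 images, labels 0-9."""

    def __init__(self, size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.images = [
            [[rng.random() for _ in range(28)] for _ in range(28)]
            for _ in range(size)
        ]
        self.labels = [rng.randrange(10) for _ in range(size)]

    def __len__(self) -> int:
        return len(self.labels)

    def __getitem__(self, index: int) -> Tuple[List[List[float]], int]:
        return self.images[index], self.labels[index]


# Notebook-style cell: inspect one sample before launching a job on the cluster.
dataset = ToyDataset(size=8)
image, label = dataset[0]

assert len(dataset) == 8                                  # expected number of samples
assert len(image) == 28 and all(len(row) == 28 for row in image)  # shape (28, 28)
assert 0 <= label <= 9                                    # label is a valid digit
assert all(0.0 <= p < 1.0 for row in image for p in row)  # pixel values in [0, 1)
print(f"{len(dataset)} samples, first label: {label}")
```

Checks like these are cheap to run interactively and catch shape or label errors before they waste cluster time.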

4.6 - Type Hinting and Docstrings

Type hinting and docstrings are a good practice when writing code. They help you and other people to understand the code and make it easier to debug. Additionally, in Machine Learning scripts, it can be useful to document variables with their shape and type.

Example of a function with type hinting and docstrings:

    import torch


    def add(a: torch.Tensor, b: torch.Tensor) -> torch.Tensor:
        """Adds two torch tensors.

        Args:
            a (torch.Tensor): First tensor.
            b (torch.Tensor): Second tensor.

        Returns:
            torch.Tensor: Element-wise sum of a and b.
        """
        return a + b

You can find an example of type hinting and docstrings at example.py.
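To illustrate documenting shapes as well as types, here is a minimal sketch that records the expected shape of each argument in the docstring and in inline comments. The function `flatten_batch` and the `Image` alias are hypothetical names, and the example uses plain Python lists so that it runs without extra dependencies; the same docstring convention can be applied to tensor arguments.

```python
from typing import List

Image = List[List[float]]  # shape: (height, width)


def flatten_batch(batch: List[Image]) -> List[List[float]]:
    """Flattens every image in a batch into a single vector.

    Args:
        batch (List[Image]): Images with shape (batch_size, height, width).

    Returns:
        List[List[float]]: Vectors with shape (batch_size, height * width).
    """
    # Row-major flattening: (height, width) -> (height * width,)
    return [[pixel for row in image for pixel in row] for image in batch]


batch = [
    [[0.0, 0.1, 0.2], [0.3, 0.4, 0.5]],  # image 0, shape (2, 3)
    [[1.0, 1.1, 1.2], [1.3, 1.4, 1.5]],  # image 1, shape (2, 3)
]
flat = flatten_batch(batch)  # shape: (2, 6)
print(len(flat), len(flat[0]))
```

Writing the shape next to each variable makes mismatches easier to spot when reading the code, before they surface as runtime errors.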


4.7 - Git

You should always keep track of the different versions of your code using a version control system like Git, which will enable you to reproduce the results of your experiments and track changes that may have introduced bugs.
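One way to tie an experiment to the exact version of the code that produced it is to record the current commit hash alongside your results. The sketch below is an assumption about how this could be done, not part of example.py: the helper name `get_git_commit` is hypothetical, and it simply calls `git rev-parse HEAD` through the standard library.

```python
import subprocess
from typing import Optional


def get_git_commit() -> Optional[str]:
    """Returns the hash of the current Git commit, or None if unavailable."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True,
            text=True,
            check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        # Git is not installed, or the script is not running inside a repository.
        return None
    return result.stdout.strip()


commit = get_git_commit()
print(f"git commit: {commit}")
```

The returned value can be stored with the other hyperparameters of the run, so that any result can later be traced back to a specific commit.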